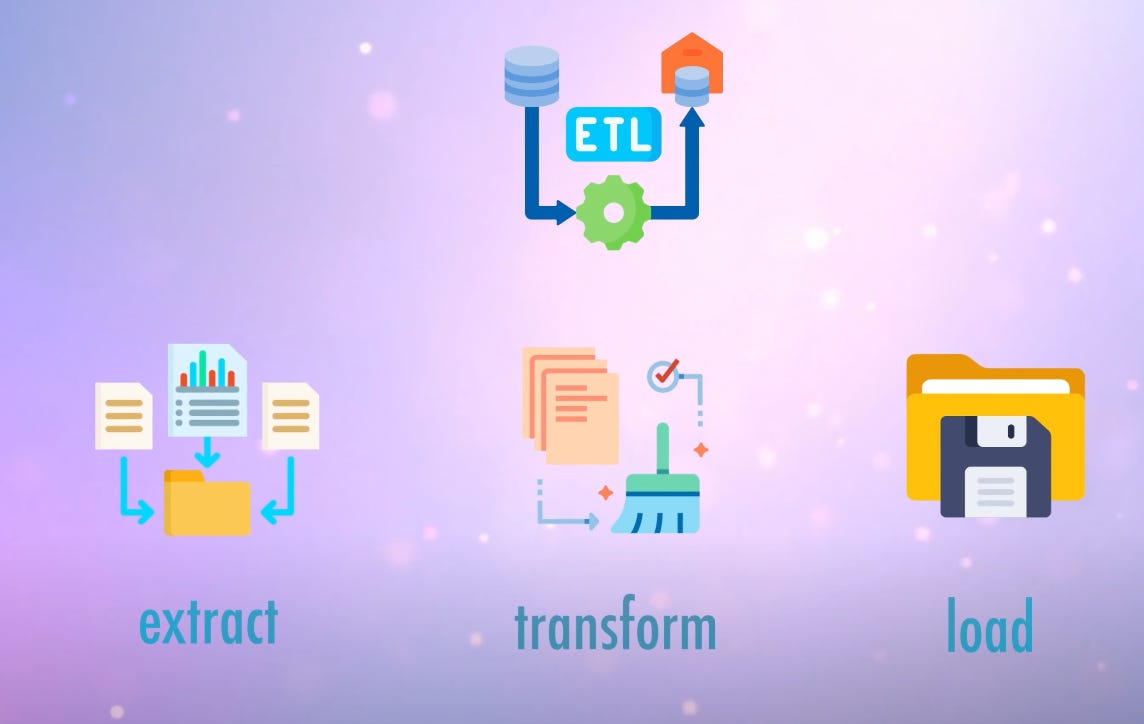

ETL is a fancy term used to gather, clean, organize, and save data into a system where you can access it later. The process involves gathering varying data sets from diverse sources and ranking them based on what you want to learn from the resulting datasets. Let's look at the first step in the ETL process.

Extract

This is the letter E in the ETL. Let's say you are a business owner. At the end of the month, you want to look at your sales report, inventory report, employee salary, and profit margins. So, you already have a challenge first to collect all of these reports on your desk so that you can look at them simultaneously. This is the extract step. Tools like Apache NiFi or custom-written programs in data engineering can bring data from external APIs, cloud object storage, data queues, or the old-fashioned FTP/SFTP servers. We are only collecting data from everywhere; we aren't doing anything else just yet. We will be covering Apache NiFi in our later videos. Please subscribe to take advantage of future videos.

Transform

This is the letter T in the ETL. At this step, we transform the collected data from the various sources in the extract step. Don't let the word transform scare you! It means we will explore the data, try to learn more, correct and combine the standard datasets, and eliminate data that makes no sense. Think of this as you assemble a final report based on the inputs you collected from the extract step. Typically, SQL queries are used to transform the incoming data. However, in the big data world, where there is an unlimited supply of data, more advanced tools such as Apache Spark transform data faster than regular SQL methods.

Load

This is the final and probably the most exciting step compared to the previous two. Load is the letter L in the ETL process. In this step, we save the transformed data and prepare to consume it. We select which database or search engine to store our transformed data. We can then query this data to draw insights and develop intelligence. We may also choose a fast-performing or slower database based on our reporting needs and cost limitations. Tools like AWS Glue or Azure Data Factory can transport the transformed data into systems like ElasticSearch, Apache Druid, Athena, or Hive to load data into our database or search systems.

So, depending on the complexity of your use case, ETL can be super easy or complex. As we explained earlier, separating your overall ETL process into individual E, T, and L processes is essential. This allows for faster troubleshooting and a more manageable approach when the ETL scope grows over time. The ETL is a foundation of the data world, and Python seems to be the number 1 choice for data engineers building the ETL process.

That's all for this post. Please like and subscribe.